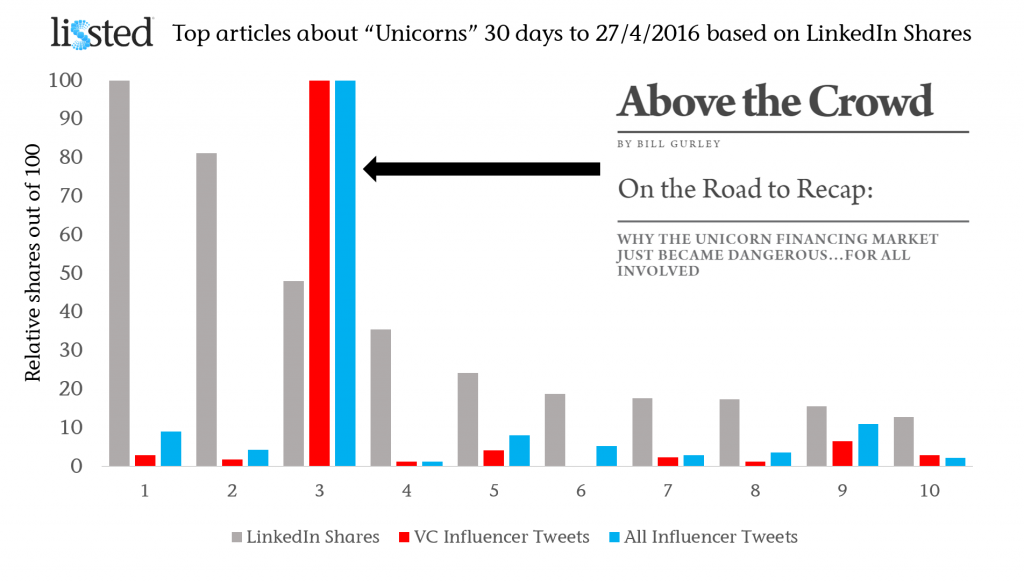

Twitter shares by influential individuals and organisations could be harnessed in an automated news content rating system.

This system could assist Facebook in identifying articles that have a high risk of being fake. The methodology is based on a journalistic verification model.

Examples of articles the model would have rated as high risk:

– FINAL ELECTION 2016 NUMBERS: TRUMP WON BOTH POPULAR ( 62.9 M -62.2 M ), a post about the election results. It was ranking top of Google for a search for “final election results” earlier this week and has had over 400,000 interactions on Facebook. It was identified as fake (obviously) by Buzzfeed.

– ‘Pope Francis Shocks World, Endorses Donald Trump for President, Releases Statement’. Shared nearly 1 million times on Facebook. Now taken down, having been reported as fake by The New York Times.

The rating system described below is subject to patent pending UK 1619460.7.

At the weekend Mark Zuckerberg described as “pretty crazy” the idea that sharing fake news on Facebook contributed to Donald Trump being elected President.

He went on to say in a Facebook post:

“Of all the content on Facebook, more than 99% of what people see is authentic. Only a very small amount is fake news and hoaxes. The hoaxes that do exist are not limited to one partisan view, or even to politics. Overall, this makes it extremely unlikely hoaxes changed the outcome of this election in one direction or the other.”

“That said, we don’t want any hoaxes on Facebook. Our goal is to show people the content they will find most meaningful, and people want accurate news. We have already launched work enabling our community to flag hoaxes and fake news, and there is more we can do here. We have made progress, and we will continue to work on this to improve further.”

Yesterday, Business Insider reported a group of students had hacked together a tool that might help.

I think part of the answer lies in another social network, Twitter.

An important aside

It’s important to note the topic of “fake” news is not black and white. For example, parody accounts and sites like The Onion are “fake news” that many people enjoy for the entertainment they provide.

There’s also the question of news that is biased, or only partially based in fact.

The idea proposed below is simply a model to identify content that is:

1. more likely to be fake; and

2. generating a level of interaction on Facebook that increases the likelihood of it being influential.

Verification, and any subsequent action, would be left to human editorial judgement.

Using Twitter data to identify potentially fake news

In its piece on Zuckerberg’s comments, The New York Times highlighted the article ‘Pope Francis Shocks World, Endorses Donald Trump for President, Releases Statement’ (now removed), which had been shared nearly a million times on Facebook. It’s fake. This never happened.

If it had been true it would obviously have been a big story.

As such, you’d expect influential Trump supporters, Republicans and other key right-wing media, organisations and individuals to have been falling over themselves to highlight it.

They weren’t.

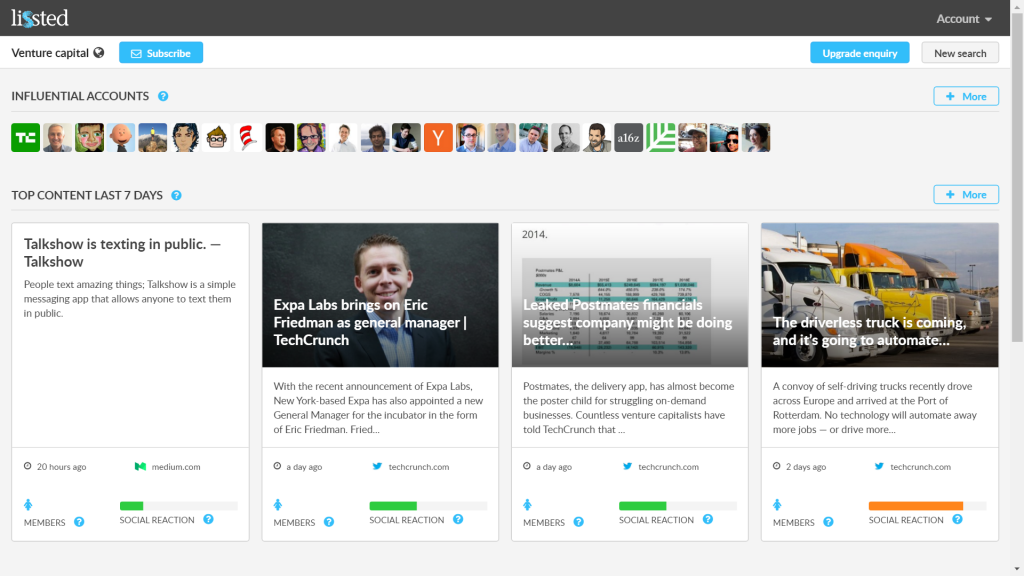

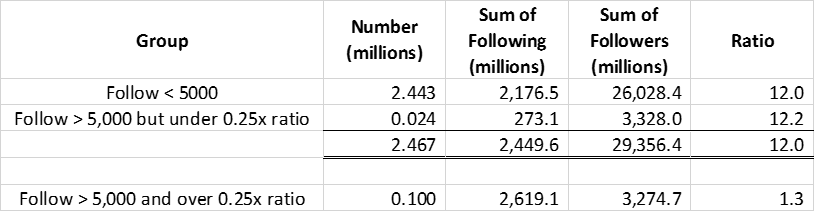

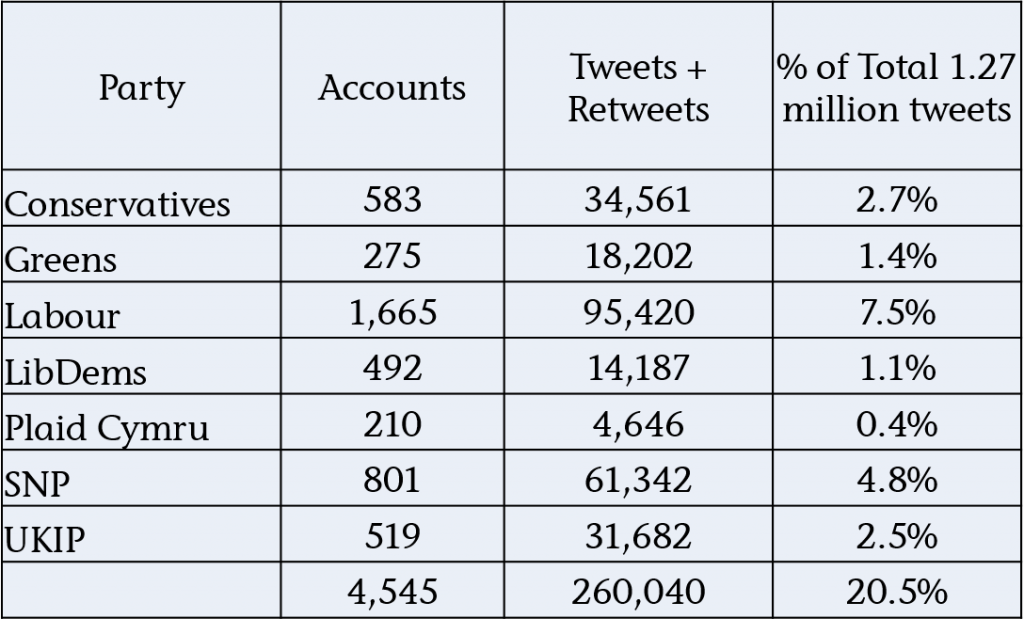

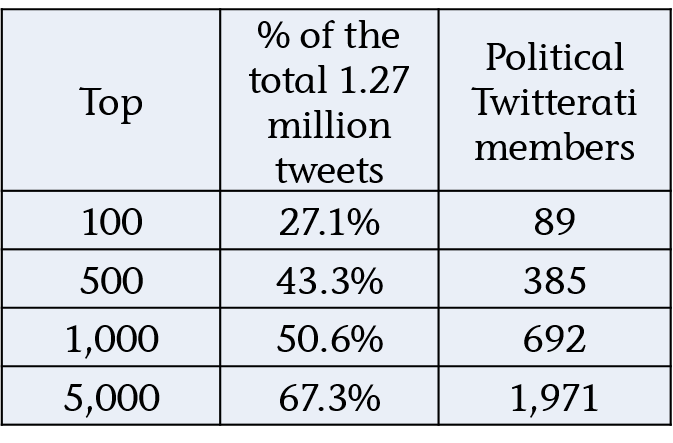

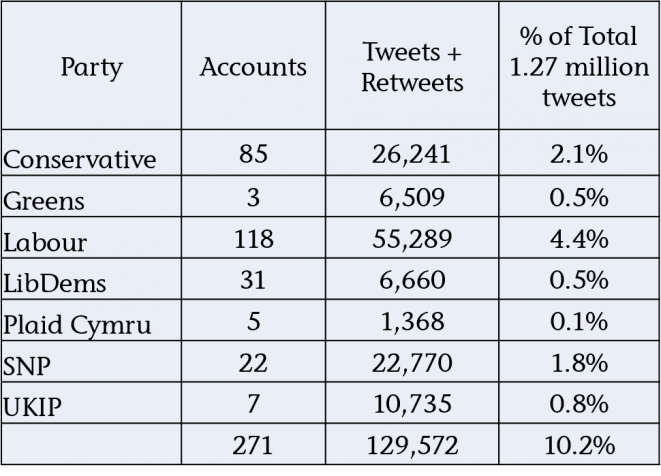

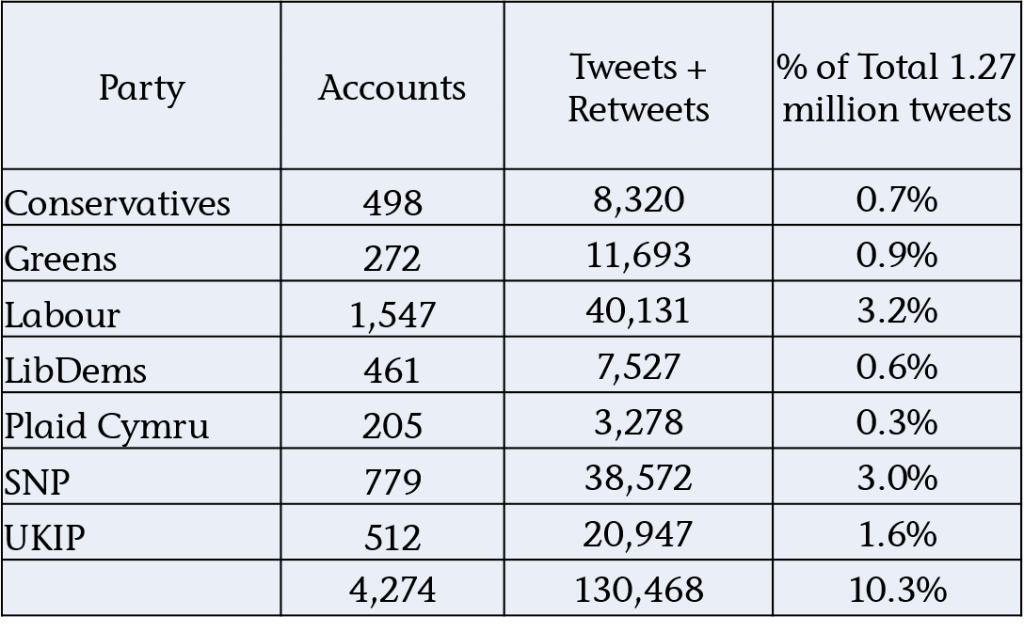

Lissted tracks the Twitter accounts of over 150,000 of the most influential people and organisations. This includes over 8,000 key influencers in relevant communities such as Republicans and US Politics, as well as potentially sympathetic ones such as UKIP and Vote Leave.

Of these 150,000+ accounts only 6 shared the article.

Extending the analysis

Lissted has indexed another 106 links from the same domain during the last 100 days.

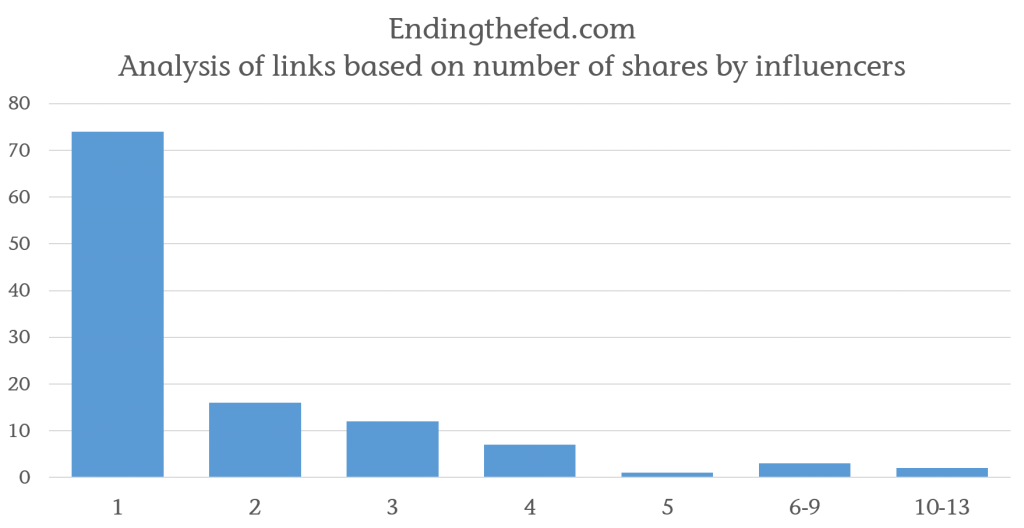

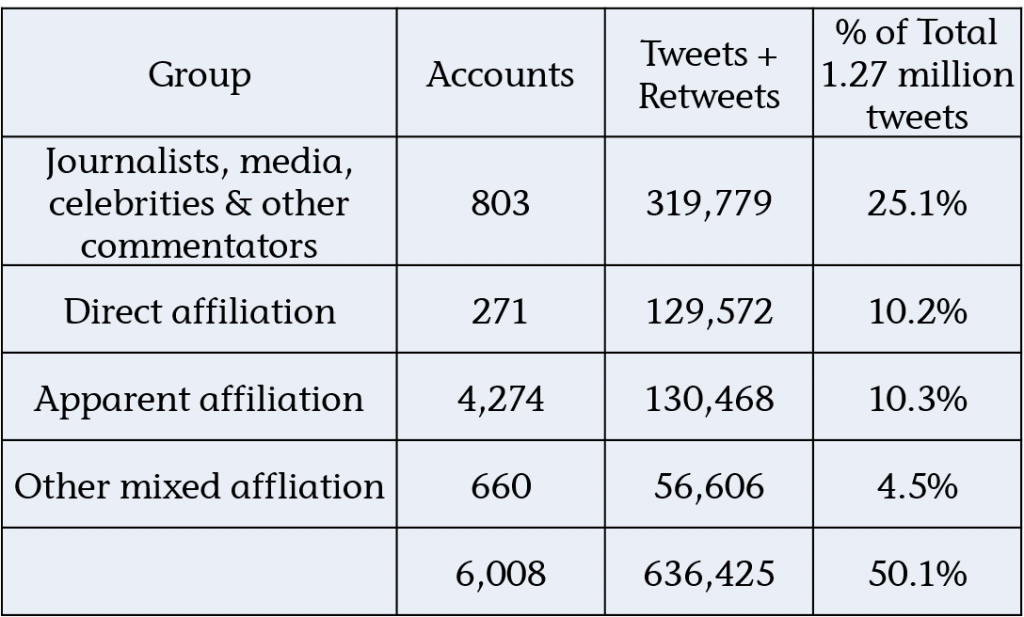

The graph below shows analysis of these links based on how many unique influencer shares they received.

You can see that 74 of the 107 links (including the Pope story) were only shared by a single member of the 150,000 influencers we track. Only 5 have been shared by 6 or more and that includes the Pope story.

That’s just 196 influencer shares in total across the 107 links.

Yet, between them these URLs have been interacted with 12.1 million times on Facebook.

And of course these are the stories that have been shared by an influencer. There could be more that haven’t been shared at all by influential Twitter users.

Lissted’s data also tells us:

– 133 of the 150,000 influencers (less than 0.1%) have shared at least one of its articles; and

– the article published by the site that has proved most popular with influencers has received 10 shares.
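The figures in this section are all simple tallies over share records. As a minimal sketch, assuming each record is a (URL, influencer account) pair, they could be produced along the following lines. The function name `influencer_share_stats` and the record shape are assumptions made for illustration, not Lissted's actual code or data format.

```python
from collections import Counter


def influencer_share_stats(shares):
    """Summarise influencer sharing for one domain.

    `shares` is an iterable of (url, account) pairs (an assumed format).
    Repeat shares of the same URL by the same account count once.
    """
    unique_pairs = set(shares)
    # Number of distinct influencers that shared each URL.
    shares_per_url = Counter(url for url, _ in unique_pairs)
    # How many URLs received exactly 1, 2, 3, ... unique influencer shares.
    histogram = Counter(shares_per_url.values())
    return {
        "links": len(shares_per_url),
        "total_shares": len(unique_pairs),
        "unique_influencers": len({account for _, account in unique_pairs}),
        "max_shares_per_link": max(shares_per_url.values(), default=0),
        "histogram": dict(histogram),
    }


# Toy data only, not the real endingthefed.com records.
example = [("a", "x"), ("a", "y"), ("b", "x"), ("b", "x"), ("c", "z")]
stats = influencer_share_stats(example)
```

Run over the real data, `histogram[1]` would correspond to the count of links shared by only a single influencer, and `total_shares` to the total number of influencer shares.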

How could this help identify high risk news?

You can’t identify fake news based simply on levels of reaction, nor by analysing what those reactions say. You need a journalistic filter. Twitter provides a potential basis for this because its data will tell you WHO shared something.

For example, Storyful, the Irish social media and content licensing agency, has used Twitter validation by specific sources as a way of identifying content that is more likely to be genuine.

I don’t know why very few of the influencers Lissted has been tracking shared the piece. But my suspicion would be that as influential members of their communities they’re:

– capable of spotting most fake news for what it is, and/or

– generally less likely to share it, as even when it serves their purpose they know that they could be called out for it (they’re more visible and they’ve got more to lose); and/or

– less likely to be exposed to it in the first place.

Obviously, not all content will be shared on Twitter by these 150,000 accounts. But you can bet your bottom dollar that any vaguely significant news story will be. The temptation to want to highlight a genuine story is just too great.

Comparison to example of genuine content

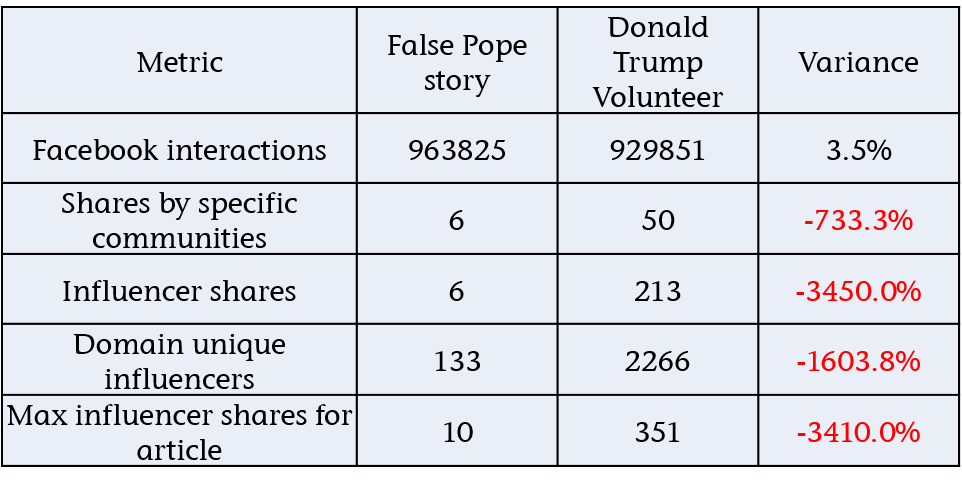

To give the Pope story numbers some context, the table below shows a comparison to this piece on the Donald Trump website – Volunteer to be a Trump Election Observer (NB: post victory the URL now redirects to the home page).

Both URLs have similar Facebook engagement, but there’s a huge difference in the influencer metrics for the article and the domain.

This is just one example though. If we build a model based on this validation methodology does it provide a sound basis for rating content in general?

NB: the model that follows focuses on content from websites. A similar approach could be applied to other content, e.g. Facebook posts, YouTube videos etc.

Proof of concept

To test the methodology I built a rating model and applied it to three sets of data:

1. The 107 links identified from endingthefed.com – data here.

2. Links that Newswhip reported as having 250,000+ Facebook interactions in the period 15/9/16 – 14/11/16 – data here.

3. A random sample of over 3,000 links that were shared by influencers from the specific communities above in the period 15/10/16 – 14/11/16 – data here.

The rating model gives links a score from 0 to 100, with 100 representing a link that has a very high risk of being fake and 0 a very low risk.

To rate as 100 a link would need to:

– have received 1,000,000 Facebook interactions; and

– be on a site that has never been shared by any of the 150,000 influencers, including the link itself.
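The text gives only the two conditions for a maximum score, not the formula itself. Purely as an illustration, here is one hypothetical scoring function that satisfies those two conditions. The linear engagement weight, the `1 / (1 + shares)` validation weight and all the names are assumptions. This is not the actual rating model, and it will not reproduce the rankings reported in this post.

```python
FB_CAP = 1_000_000  # interactions needed for the maximum score (from the text)


def risk_score(fb_interactions: int, domain_influencer_shares: int) -> float:
    """Hypothetical 0-100 risk score. NOT the real rating model.

    fb_interactions: Facebook interactions for the link.
    domain_influencer_shares: shares by tracked influencers of any link on
        the same site, including the link itself.
    """
    if fb_interactions < 0 or domain_influencer_shares < 0:
        raise ValueError("counts must be non-negative")
    # Assumed engagement weight: linear in interactions, capped at 1.0.
    engagement = min(fb_interactions / FB_CAP, 1.0)
    # Assumed validation weight: 1.0 when no tracked influencer has ever
    # shared the site, shrinking as influencer shares accumulate.
    lack_of_validation = 1.0 / (1.0 + domain_influencer_shares)
    return round(100.0 * engagement * lack_of_validation, 1)
```

Under these assumptions `risk_score(1_000_000, 0)` gives 100.0, and the score falls either as Facebook engagement drops or as more influencers share content from the site.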

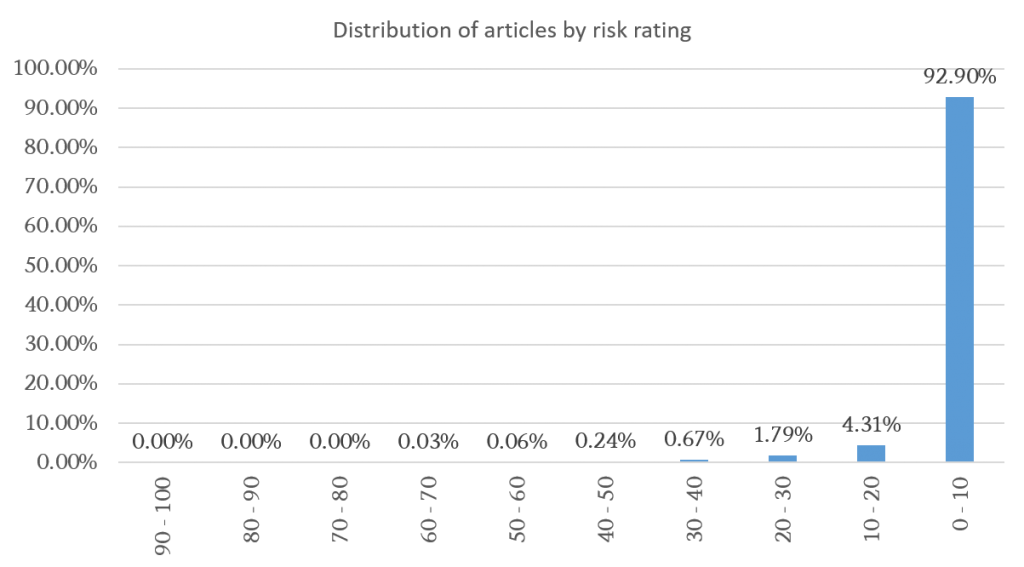

The distribution of ratings for the random sample is as follows:

Mark Zuckerberg commented that less than 1 per cent of content on Facebook is fake. If we look at the distribution we find that 1 per cent corresponds to a score of 30+.

The distribution also shows that no link in the sample scored more than 70.

Finally, over 90 per cent of URLs were rated at less than 10.
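Reading thresholds like these off a distribution is a small calculation. The sketch below assumes the sample's scores are available as a plain list of numbers. The helper names `top_fraction_threshold` and `share_below` are hypothetical and the example data is made up.

```python
import math


def top_fraction_threshold(scores, fraction):
    """Return the lowest score found within the top `fraction` of scores."""
    if not scores or not 0 < fraction <= 1:
        raise ValueError("need at least one score and 0 < fraction <= 1")
    ranked = sorted(scores, reverse=True)
    # Size of the top group, always at least one link.
    k = max(1, math.floor(len(ranked) * fraction))
    return ranked[k - 1]


def share_below(scores, cutoff):
    """Return the proportion of scores strictly below `cutoff`."""
    if not scores:
        raise ValueError("need at least one score")
    return sum(score < cutoff for score in scores) / len(scores)


# Made-up scores, purely to show the calls.
sample = [1, 2, 3, 4, 5, 8, 12, 45]
cutoff_for_top_quarter = top_fraction_threshold(sample, 0.25)
low_share = share_below(sample, 10)
```

Applied to the random sample, `top_fraction_threshold(scores, 0.01)` would return the score that marks off the top 1 per cent, and `share_below(scores, 10)` the proportion of links rated under 10.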

On this basis I’ve grouped links in the three data sets above into 4 risk bands:

Exceptional – 70+

High – 30 – 70

Medium – 10 – 30

Low – 0 – 10
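Expressed as code, the banding is a simple threshold lookup. The bands share their boundary values (30 appears in both High and Medium, for example), so this sketch assumes each lower bound is inclusive. That choice, and the function name `risk_band`, are assumptions rather than something stated above.

```python
def risk_band(score: float) -> str:
    """Map a 0-100 risk score to one of the four risk bands.

    Assumes lower bounds are inclusive, so a score of exactly 30 is High.
    """
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 70:
        return "Exceptional"
    if score >= 30:
        return "High"
    if score >= 10:
        return "Medium"
    return "Low"


# Example scores on each side of the band boundaries.
bands = [risk_band(s) for s in (5, 10, 29.9, 30, 70)]
```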

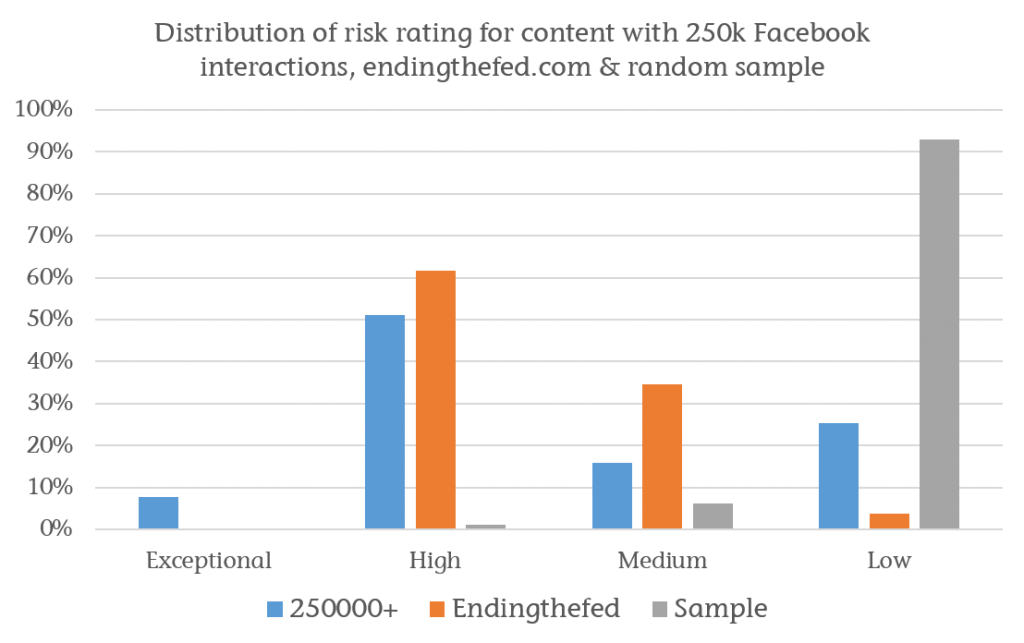

Applying these bands to the three sets gives:

Unsurprisingly a high proportion of the 250,000+ group are rated as Medium to Exceptional risk. This reflects the fact that there are so few of them – 182 – and the implicit risk of being influential due to their high engagement.

Verifying these would not be a huge drain on resources as that translates to just 2 or 3 links per day!

The graph also shows how high risk the endingthefed site is, with over 95 per cent of its content rated as High or Medium.

HEALTH WARNINGS

1. Being ranked as Medium to Exceptional risk does NOT mean the content is fake. It is simply an indicator. Just because one article on a site is fake does not mean that all the risky content is.

Also an article could be genuine viral content that’s come out of the blue from a new source.

The value in the model is its ability to identify the content that needs verifying the most. Such verification should then be done by professional journalists.

2. The rankings only reflect the 150,000 individuals and organisations that Lissted currently tracks. There could be communities that aren’t sufficiently represented within this population.

This isn’t a flaw in the methodology however, just the implementation. It could be addressed by expanding the tracking data set.

Example findings

The top 10 ranked articles in the 250,000+ group are as follows:

1. Mike Pence: ‘If We Humble Ourselves And Pray, God Will Heal Our Land’ (506k Facebook interactions, 0 influencer shares)

2. Just Before the Election Thousands Take Over Times Square With the Power of God (416k Facebook interactions, 0 influencer shares)

3. TRUMP BREAKS RECORD in Pennsylvania “MASSIVE CROWD FOR TRUMP! (VIDEO) – National Insider Politics (207k Facebook interactions, 0 influencer shares)

4. SUSAN SARANDON: CLINTON IS THE DANGER, NOT TRUMP – National Insider Politics (273k Facebook interactions, 0 influencer shares)

5. FINGERS CROSSED: These 11 Celebrities Promised To Leave America If Trump Wins (455k Facebook interactions, 1 influencer share)

6. Trump: No Salary For Me As President USA Newsflash (539k Facebook interactions, 0 influencer shares)

7. I am. (454k Facebook interactions, 1 influencer share)

8. A Secret Has Been Uncovered: Cancer Is Not A Disease But Business! – NewsRescue.com (336k Facebook interactions, 0 influencer shares)

9. The BIGGEST Star Comes Out for TRUMP!! Matthew McConaughey VOTES Trump! (294k Facebook interactions, 1 influencer share)

10. Chicago Cubs Ben Zobrist Shares Christian Faith: We All Need Christ (548k Facebook interactions, 1 influencer share)

My own basic verification suggests some of these stories are true. For instance Donald Trump did indeed say that he would not draw his Presidential salary.

However, the Matthew McConaughey story is false and, by the article’s own admission, the Pennsylvania rally image is from April not October. There are also no details on what “records” have been broken.

Outside the top 10, the post FINAL ELECTION 2016 NUMBERS: TRUMP WON BOTH POPULAR ( 62.9 M -62.2 M ), about the election results, was rated as high risk. It was ranking top of Google for a search for “final election results” earlier this week. It was identified as fake by Buzzfeed.

It would be great if any journalists reading this would go through the full list of articles rated as high risk and see if they can identify any more.

Equally if anyone spots URLs rated as low risk that are fake please let me know.

Further development

This exercise, and the mathematical model behind it, were just a rudimentary proof of concept for the methodology. An actual system could:

– utilise machine learning to improve its hit rate;

– flag sites over time which had the highest inherent risk of fake content;

– include other metrics such as domain/page authority from a source such as Moz.

Challenge to Facebook

A system like this wouldn’t be difficult to set up. If someone (Newswhip, BuzzSumo etc) is willing to provide us with a feed of articles getting high shares on Facebook, we could do this analysis right now and flag the high risk articles publicly.

Snopes already does good work identifying fake stories. I wonder if they’re using algorithms such as this to help? If not then perhaps they could.

Either way, this is something Zuckerberg and Dorsey could probably set up in days, hours perhaps!